Life insurance and annuity companies have been around for a long time. Investopedia reports that the industries’ origins can be traced to Greek and Roman antiquity. Later, and closer to home, Benjamin Franklin urged a young United States to adopt best practices developed by famed insurer Lloyd’s of London, not only to cover property and casualty hazards but also to safeguard Colonial America’s life insurance and annuity beneficiaries.

Since that time, the life insurance and annuity industries have collected and stored a staggering amount of data to protect the interests of customers and insurers alike as death claims are paid and annuities are disbursed.

Gathering and storing big data can improve operational efficiency, increase revenue, and reduce risk, but it also creates secondary challenges as the industry develops new capabilities. Generating useful insights from this data requires additional effort, sometimes beyond an organization’s existing skill sets and budget. In some instances, resources end up fixing data issues, particularly wrangling and parsing, instead of focusing on the innovations the data should enable.

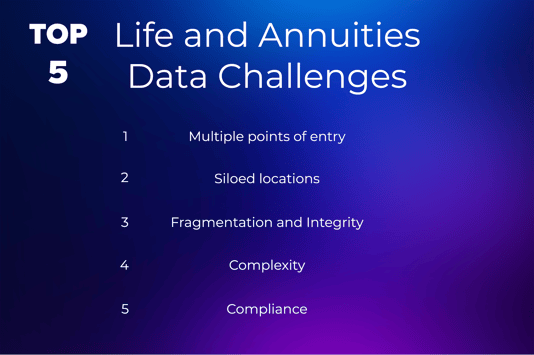

Additionally, without clear parameters, users of that data risk information overload, security concerns, inefficiency, and compliance exposure. That’s why organizational best practices should include actively contracting with modern, secure external vendors to accelerate data-driven strategies and help mitigate the following five challenges facing life insurance and annuity companies today:

1 – Multiple points of entry

Data is acquired through multiple channels

The volume is staggering. In 2020, big data grew at an unprecedented rate as carriers were forced to accelerate the development of business solutions that identify and capture pertinent data. Behavioral data, for example, can inform process improvements as well as underwriting and pricing decisions. Life insurance and annuity carriers receive information through online and offline forms, call centers, self-service portals, applications, live chats, chatbots, and even fax or postal mail.

2 – Siloed locations

Data resides in multiple, disconnected places

With an estimated 463 exabytes of data projected to be generated by humans each day by 2025, is it any wonder that organizations are scrambling to keep their data in check? Complicating this information glut is the unintentional duplication of data. Redundant and siloed storage proliferates when organizational functions collect data for their own purposes without cross-referencing or integrating their systems. Modernization is also uneven: some departments receive strategic priority for platform modernization, including data hygiene and architecture, particularly when their data is business-critical or supports acquisition- and retention-driven revenue generation.

Face it: legacy business administration and death claims systems are hard to modernize. Many carriers now run multiple layers of new custom-built technology, serving numerous product lines (both current and closed), on top of legacy systems, each with its own standard operating procedures (SOPs), multi-state compliance requirements, and federal regulations. Factor in the security risks of collecting, managing, and storing sensitive personal data, which can expose or compromise customer information. Lastly, organizations can experience costly, wasteful, and confusing data duplication.

3 – Fragmentation and integrity

Data attributes are fragmented and inconsistent across the organization

Systems, skill levels, automation levels, manual entry volumes, definitions, and data use cases vary by department, leading to discrepancies in data formats, quality, and breadth. Data and analytics professionals are forced to handle data in a highly fragmented fashion. The result is a persistent inability to integrate data across departments and to manage it at scale.
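To make the format problem concrete, here is a minimal Python sketch of mapping two departments’ versions of the same customer onto one canonical record. The field names, date layouts, and the `CustomerRecord` type are hypothetical assumptions for illustration, not any carrier’s actual schema.

```python
import re
from dataclasses import dataclass
from datetime import date, datetime

@dataclass(frozen=True)
class CustomerRecord:
    """Hypothetical canonical customer record shared across departments."""
    ssn: str          # nine digits, punctuation removed
    last_name: str    # trimmed and upper-cased
    first_name: str   # trimmed and upper-cased
    birth_date: date

# Hypothetical date layouts found in different departmental systems.
DATE_FORMATS = ("%m/%d/%Y", "%Y-%m-%d", "%d-%b-%Y")

def parse_date(raw: str) -> date:
    """Try each known layout in turn; fail loudly if none matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")

def normalize_ssn(raw: str) -> str:
    """Strip dashes and spaces, then check that nine digits remain."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) != 9:
        raise ValueError(f"expected 9 digits, got {raw!r}")
    return digits

def normalize(raw: dict) -> CustomerRecord:
    """Map one department's row (hypothetical field names) to the canonical record."""
    return CustomerRecord(
        ssn=normalize_ssn(raw["ssn"]),
        last_name=raw["last_name"].strip().upper(),
        first_name=raw["first_name"].strip().upper(),
        birth_date=parse_date(raw["dob"]),
    )

# The same (fictitious) person as stored by two departments.
claims_row = {"ssn": "000-12-3456", "last_name": "Smith ", "first_name": "ann", "dob": "03/14/1950"}
annuity_row = {"ssn": "000123456", "last_name": "SMITH", "first_name": "Ann", "dob": "1950-03-14"}

print(normalize(claims_row) == normalize(annuity_row))  # True
```

Normalizing at the point of integration, rather than changing every departmental source system, is one way to reduce these discrepancies without a full platform rewrite.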

4 – Complexity

Product-level data creates delays and new layers of complexity

Many life insurance and annuity products are highly complex and demand precise programming and administration. Consider, for instance, a variable annuity with a Guaranteed Minimum Death Benefit (GMDB) or Guaranteed Lifetime Withdrawal Benefit (GLWB) rider attached. Such a product may involve multiple investment funds, each with its own management fees; insurance guarantees that create liability for the carrier depending on fund performance; a fixed fund component whose investments the carrier manages; predictions of lapse and withdrawal behavior; annuitization options; and administrative and insurance fees charged by the carrier. These variables are pulled from myriad data sources and require rigorous scrutiny when death claims are processed.
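The carrier’s liability under a death benefit guarantee moves with fund performance. The minimal Python sketch below assumes the simplest GMDB design, a return-of-premium guarantee with dollar-for-dollar withdrawal adjustments; the function names and dollar figures are hypothetical, and actual rider terms vary by contract.

```python
def gmdb_death_benefit(account_value: float, premiums: float, withdrawals: float) -> float:
    """Death benefit under an ASSUMED return-of-premium GMDB rider:
    the greater of the account value and premiums paid less withdrawals
    (dollar-for-dollar adjustment). Real riders differ, e.g. pro-rata
    withdrawal adjustments, ratchets, or roll-ups."""
    guaranteed_minimum = max(premiums - withdrawals, 0.0)
    return max(account_value, guaranteed_minimum)

def net_amount_at_risk(account_value: float, premiums: float, withdrawals: float) -> float:
    """Portion of the death benefit the carrier funds beyond the account value."""
    return gmdb_death_benefit(account_value, premiums, withdrawals) - account_value

# $100,000 paid in, $10,000 withdrawn; the funds then fall to $72,000.
print(gmdb_death_benefit(72_000.0, 100_000.0, 10_000.0))  # 90000.0
print(net_amount_at_risk(72_000.0, 100_000.0, 10_000.0))  # 18000.0

# If the funds instead grew to $120,000, the guarantee costs the carrier nothing.
print(net_amount_at_risk(120_000.0, 100_000.0, 10_000.0))  # 0.0
```

Even in this simplified form, the benefit depends on premium, withdrawal, and account-value data that often live in different systems, which is why each input needs verification before a claim is paid.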

Each product type typically requires its own process for collecting, managing, and using financial and customer data. Data constraints common across the industry affect how that data is aggregated, cleaned, presented, and applied, which delays communication, reaction, and the resolution of issues or opportunities the data surfaces.

This lag makes it difficult to identify the same customer or prospect across product lines, or even to prevent fraud in a timely manner. Weeks, months, or even years may pass between a life event and the time the organization is ready to act on it. Such lags inevitably erode competitiveness.
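As a rough illustration of cross-product identification, the Python sketch below links policies from different product-line systems when they share an SSN or the same last name and birth date, using a small union-find so that links are transitive. The `Policy` fields, product labels, and matching rule are hypothetical assumptions; name plus birth date alone can wrongly merge two different people, so a production matcher would weigh more attributes.

```python
from collections import defaultdict
from typing import List, NamedTuple, Optional

class Policy(NamedTuple):
    product_line: str       # e.g. "term_life" or "annuity" (hypothetical labels)
    policy_id: str
    ssn: Optional[str]      # may be missing in older administration systems
    last_name: str
    birth_date: str         # ISO 8601, assumed already normalized

def link_customers(policies: List[Policy]) -> List[List[str]]:
    """Group policy IDs that appear to belong to the same person.

    Two policies are linked if they share an SSN or the same last name
    and birth date; links are transitive via union-find."""
    parent = list(range(len(policies)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    first_seen = {}  # match key -> index of the first policy carrying it
    for i, p in enumerate(policies):
        keys = [("name_dob", p.last_name.upper(), p.birth_date)]
        if p.ssn:
            keys.append(("ssn", p.ssn))
        for key in keys:
            if key in first_seen:
                parent[find(i)] = find(first_seen[key])  # merge the two groups
            else:
                first_seen[key] = i

    groups = defaultdict(list)
    for i, p in enumerate(policies):
        groups[find(i)].append(p.policy_id)
    return sorted(sorted(g) for g in groups.values())

policies = [
    Policy("term_life", "TL-1", "000123456", "Smith", "1950-03-14"),
    Policy("annuity", "VA-7", None, "SMITH", "1950-03-14"),
    Policy("annuity", "VA-9", "000987654", "Jones", "1962-07-01"),
]
print(link_customers(policies))  # [['TL-1', 'VA-7'], ['VA-9']]
```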

5 – Compliance

Producing the documentation needed to substantiate a contested death claim or payment is challenging in the age of big data. National- and state-level governing bodies have developed regulations to ensure timely and accurate payment of death claims and annuity benefits. These compliance regulations protect not only customers but also life insurance and annuity companies against fraudulent claims.

How third-party vendors overcome these challenges

Customer expectations are increasing, and insurance companies are looking for ways to exceed them. As a result, there’s boundless opportunity for today’s life insurance and annuity carriers to innovate in the marketplace through smart technology that enables them to harness third-party and big data securely and effectively.

Such solutions should help carriers gain insight, solve problems, and reduce expenses, all while making operations more straightforward. In addition, third-party vendors can serve as data aggregators and often offer better-documented, standardized application programming interfaces (APIs) for accessing data. The advantages of standardized APIs are twofold: they improve data inflow, and they counter the costly effects of fragmented data formats.

With sophisticated data governance strategies, third-party vendors enable companies to tear down silos. Reliable vendors are usually better positioned to comply with sophisticated governance and cataloging schemes because their APIs and data organization are documented and standardized. Standardized APIs and data organization are also generally system-agnostic, so they can work with many legacy and new vendor systems and reduce complexity.

Although the carrier remains ultimately responsible for compliance, experienced third-party vendors are well-versed in highly regulated industries and can focus on compliance on carriers’ behalf. Their core functionality, built on modern systems that let the vendor monitor and respond as regulations are created or amended, streamlines compliance functions.

Additionally, third-party vendors can access data that would otherwise be impermissible for carriers to obtain, such as data generated by other carriers.

What to look for in data vendors

Over the last few years, life insurance and annuity carriers have invested in external data to improve operational areas such as underwriting and pricing. However, they may still be learning how to apply external data to marketing, servicing, and claims. Consequently, carriers must sort through an array of sources, evaluate hundreds of data types, and experiment heavily to find the right vendor, the right service, and the right claims capabilities at the right time.

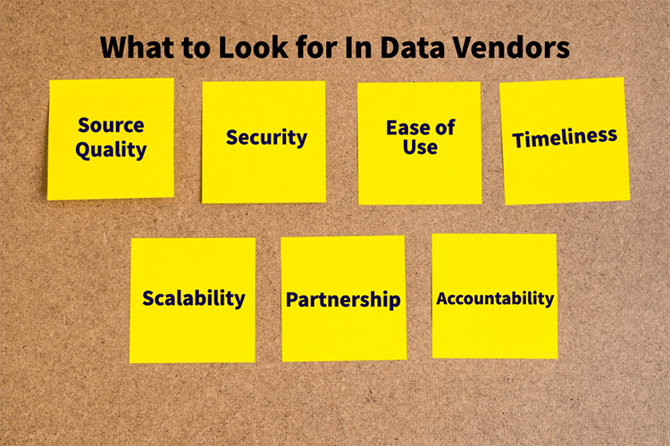

But there’s a way to streamline the selection process. Finding the best fit for your organization should start with an analysis of:

Source quality

If data quality within a source is low, even a large volume of data becomes meaningless. Similarly, sifting through poor-quality data wastes valuable organizational resources. Ascertain the quality of each source from which a vendor aggregates data to ensure high-quality results.

IT security

Data protection is crucial. When evaluating data vendors, your information security teams will undoubtedly want the most secure option for your data. Therefore, look for providers that make security a core competency.

Ease of use

Don’t forget to include ease of use in your decision criteria. Poorly designed onboarding and unnecessary noise in the data carry real costs: results take longer to achieve, and inefficiencies put undue strain on teams and processes. Demand user-friendly capabilities.

Timeliness

The quicker you receive actionable data, the better. Consider the value of obtaining data faster and whether the vendor can provide reports at frequencies that fit your operational needs.

Scalability (future appends)

A vendor should adapt as its clients’ needs evolve, without surprise upcharges or abandoning your business. You want a vendor that can handle your volume today and tomorrow and can modify its deliverables as your needs change.

Partnership

Choose a partner who’s transparent about pricing and processing steps so you don’t experience surprises after negotiations. The right partners advocate involving all internal functions so that the entire organization sees the solution’s value and makes full use of it.

Adaptability

Beyond the product itself, the vendor should be flexible enough to work within each carrier’s pace, capabilities, and resources. Look for a provider that adapts well to a variety of life insurance and annuity carriers. Every organization has unique operational needs, and the right data solution provider understands their nuances.

Harness the power of Evadata LENS to maximize third-party data

Evadata is an insurtech company, endorsed by leading insurance and annuity carriers, that provides modern, scalable data solutions. Its tailored products give carriers the opportunity to improve customer experience, increase efficiency, and promote growth.

Evadata LENS is a solution that uses advanced, comprehensive matching technology to analyze death data from multiple sources and evaluate it against carrier records. Evadata LENS is the only service positioned to provide daily data on new and open claims, ensuring year-round operational efficiency.

SIDEBAR:

Evadata LENS and the significance of death data to life insurance and annuities industries

Fundamental facets of life insurance and annuity procedures, such as past-due premium communications, unreported deaths, and reliance on beneficiaries to initiate claims, require close monitoring of death data. For example, it’s vital to know which customers have passed away in order to initiate death benefit payments owed to beneficiaries and, in the case of annuities, to prevent overpayments to deceased customers.
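Late death reporting has a direct dollar cost. As a hypothetical illustration, the Python sketch below totals the annuity payments issued after an unreported death, assuming a simple monthly schedule and treating any payment dated strictly after the date of death as an overpayment; the function name and figures are invented for the example, and actual contract terms (for instance, whether the month of death is payable) vary.

```python
from datetime import date
from typing import Sequence, Tuple

def overpayment_total(payments: Sequence[Tuple[date, float]], date_of_death: date) -> float:
    """Sum annuity payments issued after the annuitant's date of death.

    Assumes any payment dated strictly after death is an overpayment."""
    return sum((amount for paid_on, amount in payments if paid_on > date_of_death), 0.0)

# Hypothetical $1,250 monthly payments on the 1st, January through June 2023.
payments = [(date(2023, month, 1), 1250.0) for month in range(1, 7)]

# Death on March 20 goes unreported until after the June payment.
print(overpayment_total(payments, date(2023, 3, 20)))  # 3750.0
```

Each additional month of reporting lag adds another payment that may have to be recovered from the estate.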

Cross-referencing death data with existing customer records requires a level of sophistication and specialized skill that is not yet widespread in the life insurance and annuity industries. When internal data resources are earmarked for revenue-generating or transformational priorities that become years-long projects, proactively identifying unclaimed life insurance payouts may become a secondary priority.

In the last few years, the number of deaths in the United States has averaged around 3 million per year, with multiple data sources relating to each death. Social Security number (SSN)-privileged sources, state vital records, proprietary data sources, the Death Master File, and broad-coverage public sources such as obituaries and funeral notices are all instrumental for identifying deaths, but they are cumbersome to monitor.

Contracting with multiple data sources to receive, aggregate, parse, and match millions of deaths with customer records is challenging when performed by the life insurance or annuity organization itself. For most companies, it’s not an efficient or sustainable model.
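To show what "aggregate, parse, and match" involves at its simplest, here is a minimal Python sketch that matches death records from several sources against customer records and reports which sources corroborate each match. The source labels, record fields, and matching rule (SSN when present, otherwise last name plus birth date) are hypothetical assumptions, not how Evadata LENS or any particular service works; real matching must also handle typos, name changes, and conflicting records.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Optional

class DeathRecord(NamedTuple):
    source: str             # hypothetical source label, e.g. "state_vital", "obituary"
    ssn: Optional[str]      # public sources such as obituaries usually lack one
    last_name: str
    birth_date: str         # ISO 8601
    death_date: str         # ISO 8601

class Customer(NamedTuple):
    customer_id: str
    ssn: str
    last_name: str
    birth_date: str

def match_deaths(deaths: List[DeathRecord], customers: List[Customer]) -> Dict[str, List[str]]:
    """Return {customer_id: sorted sources reporting a matching death}.

    Records with an SSN are matched on SSN only; records without one fall
    back to last name + birth date (if two customers share that pair, the
    last one wins in this sketch). Agreement among several independent
    sources is treated as stronger evidence."""
    by_ssn = {c.ssn: c for c in customers}
    by_name_dob = {(c.last_name.upper(), c.birth_date): c for c in customers}
    hits = defaultdict(set)
    for d in deaths:
        if d.ssn:
            customer = by_ssn.get(d.ssn)
        else:
            customer = by_name_dob.get((d.last_name.upper(), d.birth_date))
        if customer is not None:
            hits[customer.customer_id].add(d.source)
    return {cid: sorted(sources) for cid, sources in hits.items()}

customers = [
    Customer("C1", "000123456", "Smith", "1950-03-14"),
    Customer("C2", "000987654", "Jones", "1962-07-01"),
]
deaths = [
    DeathRecord("state_vital", "000123456", "Smith", "1950-03-14", "2023-03-20"),
    DeathRecord("obituary", None, "SMITH", "1950-03-14", "2023-03-20"),
    DeathRecord("dmf", "000555555", "Brown", "1940-01-01", "2023-02-02"),
]
print(match_deaths(deaths, customers))  # {'C1': ['obituary', 'state_vital']}
```

Scaling this from three records to millions of deaths a year, across sources with different formats, licensing terms, and update schedules, is where the operational burden described above comes from.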

That’s why life insurance and annuity organizations are increasingly exploring opportunities to improve death data reporting and to quantify the impact of their claims and annuity data. With Evadata LENS simplifying that process, your organization can focus its energy and resources on success.